Although Alexa is a voice-first experience, skill developers have always been able to add UI to their responses in the form of cards or render templates.

These tools have been a useful way of displaying supplementary information, but they’ve always been relatively static: an add-on rather than part of the experience. As Alexa appears in more and more places, formats and environments, the Alexa team recently announced that skill developers would be able to create true multimodal experiences with the release of the Alexa Presentation Language (or APL, as we’ll call it in the rest of this post).

Okay – so what is APL?

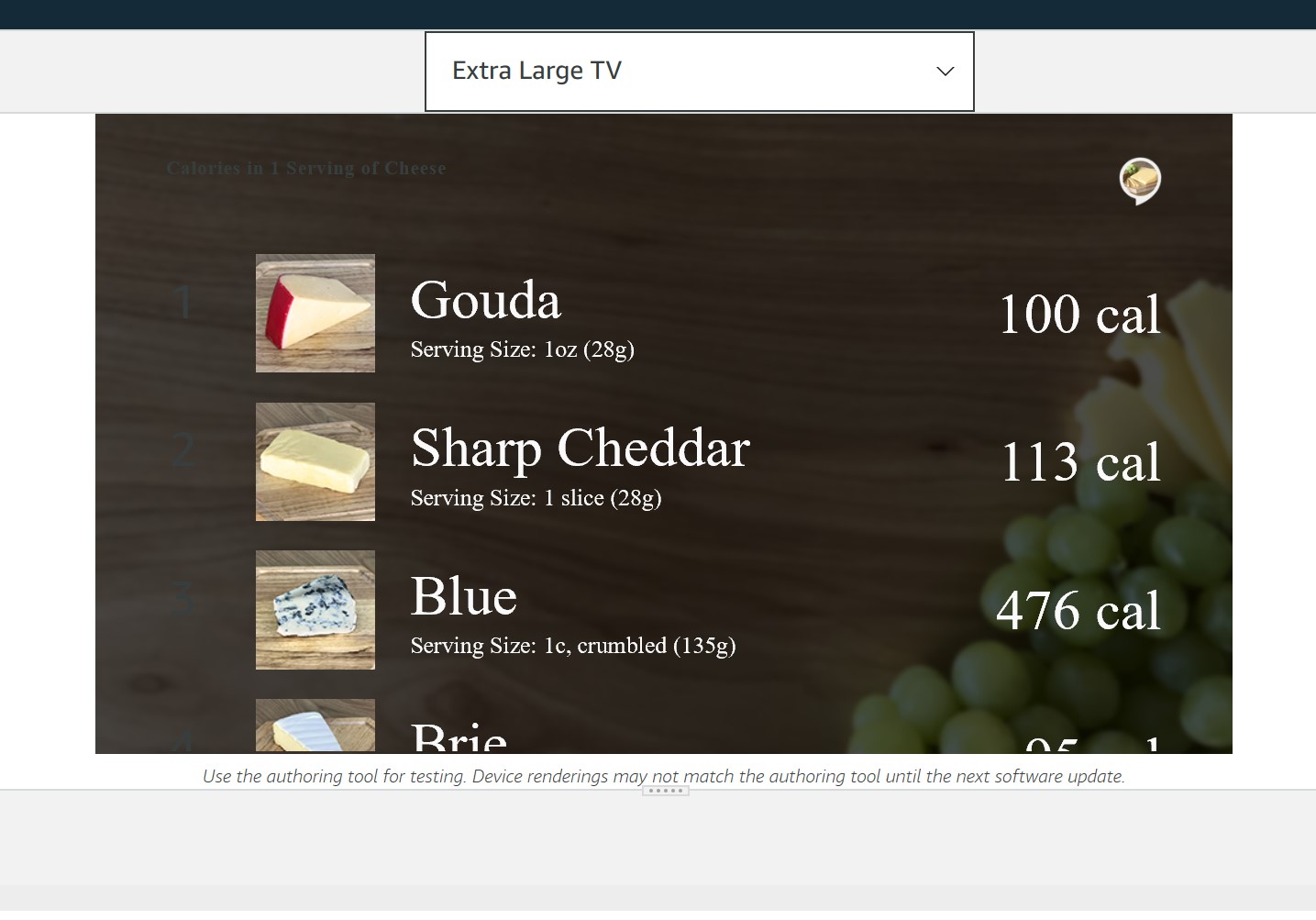

APL is a new JSON format that allows you to describe the visual aspect of a skill. The rendered content, or document, is made up of nested components that work together with accompanying data to give a rich visual experience. These components can be tailored to particular displays, so that everything from an Echo Spot to a large-screen TV is able to render the right content for the user experience.
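To make the idea of a JSON document made of nested components concrete, here is a minimal hand-written sketch of an APL document. It has a `type`/`version` header and a `mainTemplate` whose `items` hold a `Container` with a single `Text` child. It assumes APL version 1.0, which was current at the time of writing. The text value is just a placeholder, so treat this as an illustration rather than a complete reference.

```json
{
  "type": "APL",
  "version": "1.0",
  "mainTemplate": {
    "items": [
      {
        "type": "Container",
        "items": [
          {
            "type": "Text",
            "text": "Hello from APL"
          }
        ]
      }
    ]
  }
}
```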

Alexa is still voice first, so whatever you can do with the display also has to be available through a voice-only request/response. But sometimes the amount of data involved, or the time it would take to read that information out to the user, means a visual display is the better fit where one is available.

The full specification for APL is available in the (as always) detailed technical documentation.

So I can do visuals, what’s that got to do with Karaoke?

As you go through the list of components you’ll see text, images, lists and pagination. But one feature that immediately shows how powerful this new format can be is the ability to sync text and speech, the way a karaoke track does, so that the text is highlighted and scrolled piece by piece as Alexa speaks. Here’s the basic example I’m going to work through in this article. The text is a series of sentences copied and pasted from Riker Ipsum, but the content itself can be anything you want. To get the full effect, just make sure the content is long enough (or the font big enough) that it has to scroll on whatever display you’re using.

Assumption on Setup

If you’re looking into APL, I’m assuming you’ve already done a little work with Alexa skills. If not, I’d recommend the recently revamped Alexa.NET readme on GitHub, which goes through the basics of setup, getting data from requests and sending responses.

Creating the Layout

Yes, I know I said APL was all about sending these rich documents down to the devices. But there’s no point sending down a document without a UI, so we’re starting with the components we want to render – which means we create a “Layout”.

As the name suggests, a layout is a combination of components that fit together to make up a visual display. Every document has a “main” layout, which is the primary UI the device will render on screen, but you can also reference other layouts for common reusable pieces (the Alexa team have released a standard header and footer, for example).
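As a sketch of how a reusable layout fits alongside the main one, the document below declares a custom layout and then uses it from `mainTemplate`. The layout name `Caption` and its `caption` parameter are hypothetical, chosen only for illustration. The property names follow the APL 1.0 spec as I understand it: layouts are declared under `layouts`, their parameters are listed by name, and a parameter's value is supplied where the layout is used.

```json
{
  "type": "APL",
  "version": "1.0",
  "layouts": {
    "Caption": {
      "parameters": [
        "caption"
      ],
      "items": [
        {
          "type": "Text",
          "text": "${caption}"
        }
      ]
    }
  },
  "mainTemplate": {
    "items": [
      {
        "type": "Caption",
        "caption": "Declared once, reused anywhere"
      }
    ]
  }
}
```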

Within Alexa.NET, APL support lives in the Alexa.NET.APL NuGet package and namespace. We’re going to keep our layout really straightforward:

- **Container** – can have one or more child components
  - **ScrollView** – lets the contents inside it scroll. We’re putting it inside the container so it only takes up half the screen, which makes the scrolling obvious.
    - **Text** – the stuff we want to be all karaoke about


And in code, that looks like this:

```csharp
// Riker Ipsum sentences; each sentence ends with a space so they don't run together
var sentences = "Smooth as an android's bottom, eh, Data? About four years. " +
                "I got tired of hearing how young I looked. " +
                "Captain, why are we out here chasing comets? " +
                "Fate protects fools, little children and ships named Enterprise.";

// Container > ScrollView > Text
var mainLayout = new Layout(
    new Container(
        new ScrollView(
            new Text(sentences)
            {
                FontSize = "60dp",
                TextAlign = "Center",
                Id = "talker" // the SpeakItem command targets this ID later on
            }
        ) { Width = "50vw", Height = "100vw" }
    )
);
```
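For comparison, here is roughly how the `mainLayout` tree above looks as APL JSON, which is the form it reaches the device in (inside the document’s `mainTemplate`). This is a hand-written sketch using APL 1.0 property names, not captured Alexa.NET output, so field order and defaults may differ. `textAlign` is left out for brevity.

```json
{
  "type": "Container",
  "items": [
    {
      "type": "ScrollView",
      "width": "50vw",
      "height": "100vw",
      "item": {
        "type": "Text",
        "id": "talker",
        "fontSize": "60dp",
        "text": "Smooth as an android's bottom, eh, Data? About four years. I got tired of hearing how young I looked. Captain, why are we out here chasing comets? Fate protects fools, little children and ships named Enterprise."
      }
    }
  ]
}
```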

Great, now let’s see it on the screen.

Sending the document

To send the layout, we add a directive to a standard response. You’ll notice it’s being added to an empty response. That’s because we know we’re dealing with a display, and that the display (APL) is going to handle both the text and the speech in this scenario.

In a real skill, you’d want logic (probably a different Request Handler) to ensure that devices without a display were getting a standard spoken response.
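One way for that handler to decide is to look at the incoming request. Devices that can render APL advertise an `Alexa.Presentation.APL` entry under `context.System.device.supportedInterfaces`. Below is a trimmed sketch of that part of a request; the `deviceId` value is a made-up placeholder. A handler could send the APL response when the key is present and fall back to plain speech when it isn’t.

```json
{
  "context": {
    "System": {
      "device": {
        "deviceId": "amzn1.ask.device.EXAMPLE",
        "supportedInterfaces": {
          "Alexa.Presentation.APL": {
            "runtime": {
              "maxVersion": "1.0"
            }
          }
        }
      }
    }
  }
}
```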

```csharp
var response = ResponseBuilder.Empty();

var renderDocument = new RenderDocumentDirective
{
    Token = "randomToken",
    Document = new APLDocument
    {
        MainTemplate = mainLayout
    }
};

response.Response.Directives.Add(renderDocument);

return response;
```
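On the wire, the directive ends up in the `directives` array of the skill response. The sketch below shows roughly that shape. The document is trimmed to a single shortened `Text` component for brevity. There is no `outputSpeech` because the response started from `ResponseBuilder.Empty()`. Other serialization details, such as whether `shouldEndSession` appears, depend on Alexa.NET, so this isn’t meant to be byte-for-byte.

```json
{
  "version": "1.0",
  "response": {
    "directives": [
      {
        "type": "Alexa.Presentation.APL.RenderDocument",
        "token": "randomToken",
        "document": {
          "type": "APL",
          "version": "1.0",
          "mainTemplate": {
            "items": [
              {
                "type": "Text",
                "id": "talker",
                "text": "Smooth as an android's bottom, eh, Data?"
              }
            ]
          }
        }
      }
    ]
  }
}
```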

Okay. So this gives us the following:

So now we have the text – we need to get the speech wired up.

So about that text…

Okay. So we have text in a variable and we have it rendered on screen. But to get it all nicely synced up, we need to get Alexa to speak it.

For that to work, we have to stop thinking of the text we’ve rendered as text. It’s not text any more; it’s a script. We’re deciding what Alexa is going to say and how she’s going to say it. So, to make this more obvious, we’re going to turn it into a Speech object (part of the main Alexa.NET library, used to generate SSML). Yes, we’re keeping it simple, but you could add emphasis, switch to one of the recently released Polly voices, all sorts of things.

```csharp
var speech = new Speech(new PlainText(sentences));
```

Okay, so it’s SSML now. What does that give us? Well, it gives us a format that can be converted into both audio and text, and that’s exactly what we’re going to do. To do that, we’re going to move it all into a data source.

Data Sources

A data source is sent alongside the APL document in the RenderDocument directive, and lets our components reference data externally, making them more reusable. So next to our document, we’ll add a new ObjectDataSource that contains our script:

```csharp
var renderDocument = new RenderDocumentDirective
{
    Token = "randomToken",
    Document = new APLDocument
    {
        MainTemplate = mainLayout
    },
    DataSources = new Dictionary<string, APLDataSource>
    {
        {
            "script", new ObjectDataSource
            {
                Properties = new Dictionary<string, object>
                {
                    { "ssml", speech.ToXml() }
                }
            }
        }
    }
};
```

Now that we’ve moved the SSML into the data source, we can use special objects called transformers (no robots, just code!). Transformers convert information within a data source into a different format and place the result in a new property. We’re using two:

- SSML to Text: Creates a plain text version of your script

- SSML to Speech: Creates an audio output of your script

Using these two transformers together, you’ll end up with the two formats of your script that Alexa needs to perform the karaoke. So the full data source looks like this:

```csharp
DataSources = new Dictionary<string, APLDataSource>
{
    {
        "script", new ObjectDataSource
        {
            Properties = new Dictionary<string, object>
            {
                { "ssml", speech.ToXml() }
            },
            Transformers = new List<APLTransformer>
            {
                // reads "ssml", writes a plain-text version to "text"
                APLTransformer.SsmlToText("ssml", "text"),
                // reads "ssml", writes the audio version to "speech"
                APLTransformer.SsmlToSpeech("ssml", "speech")
            }
        }
    }
}
```
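As JSON, the data source above corresponds roughly to the directive’s `datasources` object below. The `ssml` value is approximately what `speech.ToXml()` produces for plain text (the sentences wrapped in a `<speak>` element, shortened here). Each transformer reads the property named by `inputPath` and writes its result to `outputName` in the same data source’s properties. This is a hand-written sketch, so field order and any extra fields Alexa.NET emits may differ.

```json
{
  "script": {
    "type": "object",
    "properties": {
      "ssml": "<speak>Smooth as an android's bottom, eh, Data? About four years.</speak>"
    },
    "transformers": [
      {
        "inputPath": "ssml",
        "outputName": "text",
        "transformer": "ssmlToText"
      },
      {
        "inputPath": "ssml",
        "outputName": "speech",
        "transformer": "ssmlToSpeech"
      }
    ]
  }
}
```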

There we go. From the “ssml” property, the transformers will create “text” and “speech” properties for us to use. The next step is to alter our layout so that it can use these new fields.

Binding the Main Layout to the Data Source

Layouts access data through parameters. The parameters are declared on the layout, and their values are supplied wherever the layout is used.

The main layout in an APL document is passed a single parameter, “payload”, which maps to the DataSources we set up.

So to make this work, we have to add a “payload” parameter to our layout object:

```csharp
mainLayout.Parameters = new List<Parameter>();
mainLayout.Parameters.Add(new Parameter("payload"));
```

Now we tell the text object how it’s going to work:

- Its text content comes from the “text” property in the data source.
- The audio from the data source goes into its “speech” property.

We do this using the APL data-binding syntax:

```csharp
new Text(sentences)
{
    FontSize = "60dp",
    TextAlign = "Center",
    Id = "talker",
    // Bound to the transformer outputs in the "script" data source
    Content = APLValue.To<string>("${payload.script.properties.text}"),
    Speech = APLValue.To<string>("${payload.script.properties.speech}")
}
```
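In APL JSON terms, the `payload` parameter and the two bindings look roughly like the fragment below. The `Container` and `ScrollView` wrappers are left out to keep it short. Because `payload` resolves to the directive’s `datasources`, the expressions `payload.script.properties.text` and `payload.script.properties.speech` point at the two transformer outputs.

```json
{
  "mainTemplate": {
    "parameters": [
      "payload"
    ],
    "items": [
      {
        "type": "Text",
        "id": "talker",
        "fontSize": "60dp",
        "text": "${payload.script.properties.text}",
        "speech": "${payload.script.properties.speech}"
      }
    ]
  }
}
```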

Brilliant! We’ve started using a data source, we’ve transformed it into text and audio, we’ve data-bound the APL component. We’re rocketing forward in terms of APL usage! We deploy the code, run the skill and…

What the?! IT LOOKS EXACTLY THE SAME! You mean to say we’ve done all that work, added several more pieces of complexity… and it’s done nothing different?!?!?!

Well…there is one last thing

Telling Alexa to Say it

You see, all we’ve done is wire up the data to make it all possible. You could have more than one piece of text and speech on screen, so unless you tell Alexa what to say, she’s going to sit there silently waiting for direction.

But don’t worry, it’s just a few extra lines. Once you’ve added the render document directive, you need to add a second directive, the ExecuteCommandsDirective. It lets a skill send commands that run against the APL document currently on screen.

Our command is SpeakItem. Given the ID of a component, Alexa reads out that component’s Speech property. We’re also going to ask for the highlighting to be done line by line, to give it that karaoke feel.

You’ll notice the directive uses the same “randomToken” token value that we set on the RenderDocumentDirective. These have to match. In a real skill the token would be something more meaningful, to make sure the command runs against the correct instance of the layout.

```csharp
var speakItem = new SpeakItem
{
    ComponentId = "talker",
    HighlightMode = "line"
};

response.Response.Directives.Add(new ExecuteCommandsDirective("randomToken", speakItem));
```
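The ExecuteCommands directive added here serializes to something like the JSON below. `componentId` has to match the `id` given to the Text component, and `token` has to match the RenderDocument token. Any other optional SpeakItem properties are left at their defaults. As with the earlier fragments, this is a hand-written sketch rather than captured Alexa.NET output.

```json
{
  "type": "Alexa.Presentation.APL.ExecuteCommands",
  "token": "randomToken",
  "commands": [
    {
      "type": "SpeakItem",
      "componentId": "talker",
      "highlightMode": "line"
    }
  ]
}
```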

So now? Now if I publish it?

Yes! Now if you publish it, it will work, because the script is in both the audio and the text, and Alexa has been explicitly told to speak it. Well done!

Now, I know this seemed like a lot of steps, but syncing text and speech is probably the most involved process I’ve seen so far with APL. When you think about it, we’ve covered a lot to get here from nothing: documents, components, commands, data sources and transformers. It shows just how powerful APL can be.

Try out some simpler layouts, play around with it, and see what you can achieve. APL is a big thing, and can make a huge difference to how engaging your skills can appear.