Okay, so you’ve survived your application’s monolith phase, but it left you jaded and cynical. You understand that breaking this monstrosity down into smaller services should make it more maintainable, easier to change and faster to innovate on. Microservice all the things!

And it makes sense, it’s good. You have all these little web apps making their little HTTP requests and lots of little requests make up some big functionality. They’re zipping this way and that and you’re tweaking them and versioning them (you’ve got versioning right? Otherwise that breaking change is really gonna break!) and they’re talking to one another at scale and it feels like you’ve turned a corner.

Then someone on another team, outside of your beautiful microservice world, starts talking in a slightly more panicked tone. A colleague joins them and they both start typing furiously. Then they sit up and glare in the direction of your team… and you don’t understand. Sure, you rely on their system for one of your services, but your microservice just takes the request coming in and asks their system for some data. You know the level of traffic you get, it’s fine, what could possibly go wrong?

The story so far

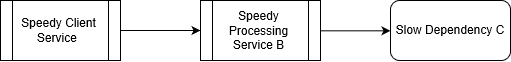

It all seemed straightforward. You had two services, and the external team’s system.

Your app makes an HTTP request to Speedy Client Service

It handles the caching and the app interaction. That’s its job, so to keep it clean you isolated the actual system boundary in its own microservice, Processing Service B, and you make an HTTP call to that.

Service B is just a proxy; it doesn’t do anything particularly clever. It handles calls to Dependency C, a requirement for the journey, and just waits for the request to complete.

Dependency C can be a little flakier than we’d like, so Service B will retry the request if it fails, and map the response to a more microservice-friendly structure.

They each have their own job, and as each is a microservice they agree a contract and don’t worry about what’s calling them. This all seems pretty okay. So why is the team angry?

Success is a 2xx status code

Well here’s the thing. C is a little slow today, actually it’s a little slower than usual, and what that’s meant is that the code we’ve got in our processing service? It’s timing out.

Now we know C can be a little flaky, we allowed for this, so our code spots the call failing and retries.

While we’re flowing through our retry logic, unknown to us, our client service decides something’s gone wrong with us because we’re taking too long – so its call times out and now – in the search for that elusive 2xx status code – it retries the HTTP call to us.

And this is an important call, and the app now thinks the main client service is timing out. But that’s got a cache and stuff, so we can just hit it again.

So…we have the app making multiple calls to the client service

Each turning into multiple calls to the processing service

Which in turn makes multiple calls to the dependency service

Which is just sat there, now with a cascade of calls, just trying to do its job and handling ALL the calls – except now what should have been a 1 to 1 call ratio is turning into 1 to x, and we’re hammering their system. Hence the glares.
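To put a rough number on that “x”, here’s a back-of-the-envelope sketch. It assumes every layer gives up and retries in full whenever the layer below it times out, and the attempt counts are made up for illustration rather than taken from any real system.

```python
from math import prod


def worst_case_calls(attempts_per_layer):
    """Upper bound on calls reaching the last dependency for ONE user action.

    Each layer may try up to N times, and every one of those tries can
    trigger a full set of tries in the layer below, so the counts multiply.
    """
    return prod(attempts_per_layer)


# Hypothetical retry budgets: each layer tries up to 3 times.
layers = {"app": 3, "client service": 3, "processing service": 3}
print(worst_case_calls(layers.values()))  # 27
```

Three polite-looking retries per layer can turn one tap in the app into 27 calls on Dependency C.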

HTTP boundaries, not all the things

So we liked HTTP as the way we wanted our services to communicate. When we split up our monolith it gave us easy caching, well-understood return codes and human-readable requests. It made perfect sense. It didn’t hurt that it also made building apps and websites that could talk to it super easy!

And we knew we wanted each of our components to scale, release and be maintained independently, so each of them became a microservice. We agreed contracts between them and that was that.

“HTTP is the way we wanted our services to communicate”

“We wanted each of our components to scale…independently”

Do you see the difference? When you’re in the world of stories and release cycles it’s easy to miss. A component isn’t the same as a service. To maintain your contracts and your ability to communicate with multiple clients you absolutely need a point of entry that is contracted and controlled. That boundary is ideal for something like HTTP.

Behind that entry point can be multiple components, each a unit of work that you want to scale independently. But if a component is only being spoken to by other components, then you can use whatever communication process is fit for purpose. Sometimes that’s still HTTP, but you should feel free to have the choice.

In this scenario, slow dependency C, it seems like it can’t scale as well as we can, can’t handle the load. So maybe our component should be run from an external queue? That way we can throttle the number of simultaneous requests that occur, potentially restart our service, and still keep track of all the outstanding requests.
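As a minimal sketch of that throttling idea: a fixed pool of workers pulls from a queue, so the number of simultaneous calls to Dependency C can never exceed the pool size. Everything here is an assumption for illustration: an in-process `asyncio.Queue` stands in for a real external queue, `call_dependency_c` is a hypothetical stub, and the limit of 2 is arbitrary.

```python
import asyncio

MAX_IN_FLIGHT = 2  # assumed limit that Dependency C can comfortably handle


async def call_dependency_c(request_id, stats):
    # Hypothetical stand-in for the real call; it only tracks concurrency.
    stats["in_flight"] += 1
    stats["peak"] = max(stats["peak"], stats["in_flight"])
    await asyncio.sleep(0.01)  # pretend C is slow
    stats["in_flight"] -= 1
    return f"result-{request_id}"


async def worker(queue, results, stats):
    # Each worker handles one request at a time, so the number of workers
    # is the cap on simultaneous calls to C.
    while True:
        request_id = await queue.get()
        try:
            results[request_id] = await call_dependency_c(request_id, stats)
        finally:
            queue.task_done()


async def drain(request_ids):
    queue = asyncio.Queue()
    results, stats = {}, {"in_flight": 0, "peak": 0}
    for request_id in request_ids:
        queue.put_nowait(request_id)
    workers = [
        asyncio.create_task(worker(queue, results, stats))
        for _ in range(MAX_IN_FLIGHT)
    ]
    await queue.join()  # wait until every queued request has been handled
    for task in workers:
        task.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results, stats["peak"]


results, peak = asyncio.run(drain(range(10)))
print(len(results), "requests handled; peak concurrency", peak)
```

With a real external queue the outstanding requests would also survive a restart of the service, which an in-memory queue like this one does not give you.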

Yes, you then have an asynchronous process, but chances are your HTTP request was async anyway, right? So swapping your web request for some other async process that waits for the answer to arrive on your queue… is that really so different? You’re still just waiting.

Anyone who’s dealt with integrating with Slack will know they do things a bit differently too. You have to return your initial response within a few seconds, to confirm that the message has been received, but you’re sent a second URL as part of the payload, which you POST to once the request is successful.

Received and successful aren’t the same thing. In this situation both are handled by HTTP (oh look, they’re both boundaries with your integration) but the system is patient. Let us know you understand and we’ll give you way more time; we just need that small nod to start with, just to be sure. (I really like this separation. It feels clean.)
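The “nod now, answer later” shape can be sketched roughly like this. It is not Slack’s API: `handle_command`, `do_work`, `post_result` and the payload field names are all hypothetical, and `post_result` stands in for whatever HTTP POST you’d make to the callback URL.

```python
import threading


def handle_command(payload, do_work, post_result):
    """Acknowledge straight away; deliver the real answer via the callback URL."""

    def finish():
        outcome = do_work(payload["text"])             # the slow part
        post_result(payload["response_url"], outcome)  # "successful"

    background = threading.Thread(target=finish)
    background.start()
    # "Received": returned immediately, before the slow work has finished.
    return {"status": 200, "body": "Got it, working on it"}, background


# Usage with stubbed-out work and a fake POST that just records what was sent.
sent = []
ack, background = handle_command(
    {"text": "report", "response_url": "https://example.invalid/callback"},
    do_work=lambda text: text.upper(),
    post_result=lambda url, outcome: sent.append((url, outcome)),
)
background.join()  # only so the example finishes deterministically
print(ack["status"], sent)
```

The caller gets its 2xx without waiting on the slow work, so it has no reason to time out and retry.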

Yes, you lose a measure of control compared to raw request/response. You send out a request and trust you’ll get a response. But is that any different to requesting info from a web server and expecting it will return in time? You will still need to handle timeouts and other “unhappy path” situations, and it’s still within your control. Your clients may still retry, so if you can make your request idempotent then perhaps you can validate the request, or check to see if you’re still waiting for that response. There’s nothing that says one valid result from your queue can’t satisfy more than one pending request.
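That last point, one result answering several pending requests, might look something like the sketch below. It assumes requests can be identified by an idempotency key; the `Coalescer` class and `slow_fetch` stub are hypothetical names for illustration, not part of any library.

```python
import asyncio


class Coalescer:
    """Share one in-flight lookup between all callers asking for the same key."""

    def __init__(self, fetch):
        self._fetch = fetch
        self._pending = {}  # key -> task that is still waiting for its answer

    async def get(self, key):
        task = self._pending.get(key)
        if task is None:
            # First request for this key: start the real work once.
            task = asyncio.ensure_future(self._fetch(key))
            self._pending[key] = task
            task.add_done_callback(lambda _: self._pending.pop(key, None))
        # Retries and duplicates wait on the same task instead of calling
        # downstream again; shield() keeps one cancelled caller from
        # cancelling the shared work for everyone else.
        return await asyncio.shield(task)


async def demo():
    calls = []

    async def slow_fetch(key):  # hypothetical stand-in for the dependency
        calls.append(key)
        await asyncio.sleep(0.01)
        return f"data-for-{key}"

    coalescer = Coalescer(slow_fetch)
    answers = await asyncio.gather(*(coalescer.get("order-42") for _ in range(5)))
    return answers, calls


answers, calls = asyncio.run(demo())
print(len(answers), "answers from", len(calls), "downstream call(s)")
```

Five impatient clients, one call to the dependency.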

And this is just one scenario with a couple of options. Your solution may involve different ways of working again.

But request/response is so easy!

Yes, this approach is a little more complicated, and yes, members of your team may have to learn some new architecture patterns. But development of a process is based on the reality in which you work, not the ideal you want to work in. And that team? The ones glaring at you? They’re your dependency, so being a good client for them is as important as being a good service to your own clients. Otherwise it’s your service that starts to fail to deliver to your customers. Maybe HTTP is the right choice, maybe it’s not. Maybe it is, but you offer a different way of interacting with it. Maybe you can queue all the things.

The important thing is that you differentiate between a service and a component, between when you’re dealing with a boundary and when you’re dealing with internal communication, in order to broaden the options you’ve got.

Just remember that “fit for purpose” isn’t the same as “one size fits all”.